Writing high performance parallel codes is difficult, and one of the reasons for this is the fact that we still predominantly use sequential languages, such as C, C++, or Fortran, with some add on support for parallelism such as MPI or OpenMP. There have been numerous attempts to solve this, and many parallel programming languages and libraries developed, but a major challenge is that they tend to either provide programmability, or performance, but not both. Put simply, whilst the abstractions that many parallel languages provide enable the quick and easy development of code, they also impose specific assumptions around key factors such as data decomposition, the form of communication, or distribution of work. In many cases it can then be very difficult or even impossible for HPC programmers to go in and further tune these, which is really important for performance. Conversely, those technologies that provide a rich API for tuning and many options, can require a steep learning curve, and be easily misused resulting in poor performance.

Writing high performance parallel codes is difficult, and one of the reasons for this is the fact that we still predominantly use sequential languages, such as C, C++, or Fortran, with some add on support for parallelism such as MPI or OpenMP. There have been numerous attempts to solve this, and many parallel programming languages and libraries developed, but a major challenge is that they tend to either provide programmability, or performance, but not both. Put simply, whilst the abstractions that many parallel languages provide enable the quick and easy development of code, they also impose specific assumptions around key factors such as data decomposition, the form of communication, or distribution of work. In many cases it can then be very difficult or even impossible for HPC programmers to go in and further tune these, which is really important for performance. Conversely, those technologies that provide a rich API for tuning and many options, can require a steep learning curve, and be easily misused resulting in poor performance.

Type oriented programming

We think that a mixed-mode approach to abstraction is called for, where in the absence of further information the compiler makes some default decisions, but these can be overridden by the programmer taking explicit control and deciding the nitty gritty details. We believed that the type system is an ideal place to achieve this, where all the expressivity and complexity associated with parallelism is contained within types, and applied to variables or even snippets of code. Types are combined together into a type chain and this is illustrated below, where variable m is typed to be a character with other attributes associated with it. Precedence is from right to left, so if there is any conflicting behaviours between types, then the semantics of the righter most one will apply. Types can be provided with arguments, which can include nested type chains, for instance A::B::C in the example below, which provides further specialisation to the parent type.

Testing the idea with the Mesham parallel programming language

We developed an imperative language called Mesham to explore and evaluate this idea. More details on the language can be found here which includes examples and benchmarks. The compiler and runtime is hosted on Github (here).

We developed an imperative language called Mesham to explore and evaluate this idea. More details on the language can be found here which includes examples and benchmarks. The compiler and runtime is hosted on Github (here).

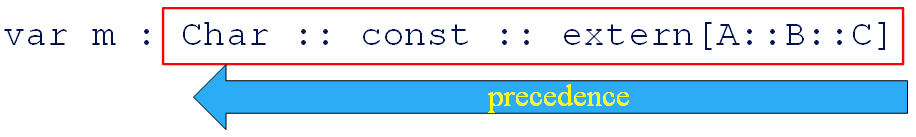

The core language itself is extremely simple, with the majority of support for parallelism provided via the type system. By default this is Partitioned Global Address Space (PGAS), and more specifically is the communication mechanism implemented by the relevant types as a default if no further control is provided. An example of this is illustrated on the left, where variables a and b are both integers and, via the type system, are allocated to the memory space of different processes. The assignment at line three will result in the copying of the value in b into that of a, and because they are on different processes this involves communication. Using such a default approach, the exact form of communication is abstracted (in-fact it is RMA), with the programmer being aware that once the line is executed the assignment has completed. If they were to change the types, for instance allocating b on process one, then the underlying assignment will automatically change. It is however possible to further control this, for instance line three could be replaced by (a :: channel[3,1]):=b which will set up an explicit point to point channel between the two processes and use this for assignment, which could improve performance. In this manner, other options such as asynchronous communication, can be applied. The Mesham runtime currently supports the implementation of parallelism via MPI, pthreads, and a hybrid mixed mode approach.

Application to arrays

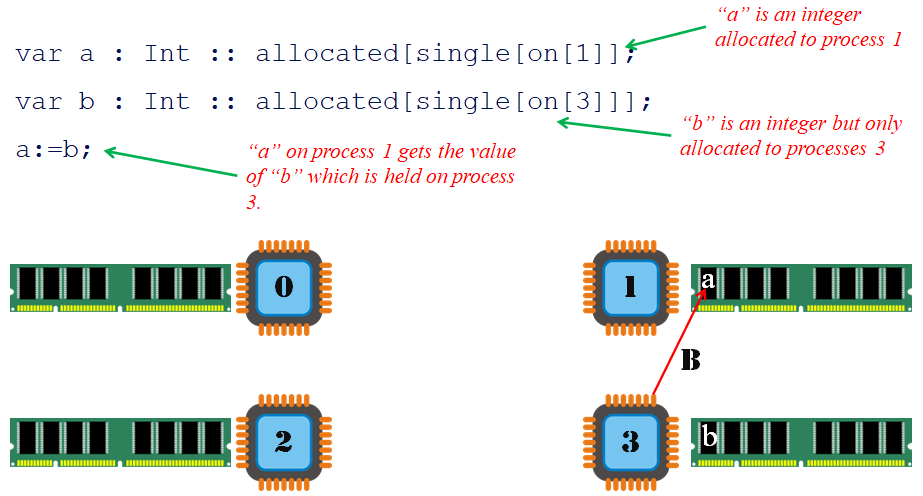

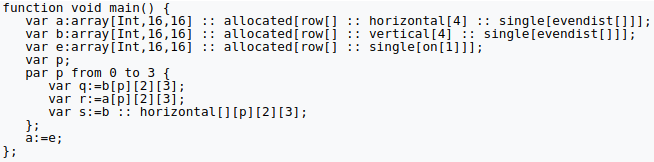

In Mesham we also provide types that describe parallel array data structures, partitioning these according to some scheme and then distributing the resulting blocks across processes. For instance in the diagram on the right, blocks associated with a partitioned array are distributed evenly, cycling around the processes if necessary. Crucially, the types take care of what is allocated where, and abstracts all the control and communication required. In the code example below, which involves 4 processors, there are three arrays declared, a, b and e. Array a is horizontally partitioned into 4 blocks, evenly distributed amongst the processors, whilst b is vertically partitioned into 4 blocks and also evenly distributed amongst the processors. Array e is located on processor 1 only. All arrays are allocated row major. In the par loop, which runs the iterations concurrently across processes, variables q, r and s are declared and assigned. Because b is partitioned vertically and a horizontally, variable q is the value at b‘s block memory location 11, whilst r is the value at a’s block memory location 35. On line 9, variable s is the value at b‘s block memory location 50 because, just for this expression, the programmer has used the horizontal type to take a horizontal view of the distributed array. In line 11 the assignment a:=e results in a scatter as per the definition of its declared type.

In Mesham we also provide types that describe parallel array data structures, partitioning these according to some scheme and then distributing the resulting blocks across processes. For instance in the diagram on the right, blocks associated with a partitioned array are distributed evenly, cycling around the processes if necessary. Crucially, the types take care of what is allocated where, and abstracts all the control and communication required. In the code example below, which involves 4 processors, there are three arrays declared, a, b and e. Array a is horizontally partitioned into 4 blocks, evenly distributed amongst the processors, whilst b is vertically partitioned into 4 blocks and also evenly distributed amongst the processors. Array e is located on processor 1 only. All arrays are allocated row major. In the par loop, which runs the iterations concurrently across processes, variables q, r and s are declared and assigned. Because b is partitioned vertically and a horizontally, variable q is the value at b‘s block memory location 11, whilst r is the value at a’s block memory location 35. On line 9, variable s is the value at b‘s block memory location 50 because, just for this expression, the programmer has used the horizontal type to take a horizontal view of the distributed array. In line 11 the assignment a:=e results in a scatter as per the definition of its declared type.

The programmer can also experiment with important parallel decisions which govern performance, for instance the form of distribution, simply by changing the type. In the majority of cases no further code changes are necessary, and this enables the HPC programmer to develop a working, simple version initially, safe in the knowledge that they can then easily optimise this if needed later on. We also provide types that abstract stencil based computations, which will implement halos to optimise communication, and types can easily be plugged in by developers to provide additional functionality such as new distribution schemes.

The programmer can also experiment with important parallel decisions which govern performance, for instance the form of distribution, simply by changing the type. In the majority of cases no further code changes are necessary, and this enables the HPC programmer to develop a working, simple version initially, safe in the knowledge that they can then easily optimise this if needed later on. We also provide types that abstract stencil based computations, which will implement halos to optimise communication, and types can easily be plugged in by developers to provide additional functionality such as new distribution schemes.

Task-based type oriented programming

Until this point we focused on applying types to data, namely variables, but there are also potential benefits to typing snippets of code too. One of my PhD students, Ludovic, explored this idea in the first year of his PhD research and this enabled the explicit typing of Mesham functions. These were then implemented as parallel tasks, with types controlling how they are scheduled, executed, and dependencies. This worked well, and the crucial point we were trying to explore was whether a mixed-mode approach to abstraction also helps with task-based parallelism, which we believe it does.

Related publications

- Type oriented programming for task based parallelism. Brown, N., Capelli, L. & Bull, J. In proceedings of the Type-driven Development Workshop (more info)

- Type oriented parallel programming for Exascale. Brown, N. In Advances in engineering software (more info)

- A type-oriented Graph500 benchmark. Brown, N. In Lecture Notes in Computer Science vol. 8488 (more info)

- Applying Type Oriented Programming to the PGAS Memory Model. Brown, N. In 7th International Conference on PGAS Programming Models (more info)

- Type oriented parallel programming for Exascale. Brown, N. In proceedings of the First International Conference on Exascale Applications and Software (more info)