This page summarises the research areas that I am interested in, along with some of my activities. The results of these projects can be seen in my publications.

Novel architectures for HPC

A major component of my research is exploring the role that new and upcoming hardware architectures can play in accelerating high performance computing codes. These are often orders of magnitude more energy efficient than existing technologies, and the challenge is how to exploit them most effectively. Whether it is processing workloads centrally on supercomputers in a data centre, or out at the edge, requirements are being driven by scientific and societal ambition that uniquely suit these new types of hardware.

RISC-V for HPC

RISC-V is an open ISA and, with over 10 billion RISC-V devices in existence, it has enjoyed phenomenal growth since it was developed over a decade ago. I am PI of the EPSRC-funded RISC-V testbed, which aims to explore and popularise RISC-V in the context of HPC. The modular nature of RISC-V, along with the ability to develop application-specific coupled accelerators, means that in the future we will likely have bespoke HPC hardware.

In addition to physical RISC-V CPUs, which we are benchmarking and porting HPC codes and libraries to, I am also exploring soft-core RISC-V CPUs. These are software descriptions of CPUs which can be used to configure FPGAs so that they function as a CPU. Given the openness around RISC-V, a plethora of open source RISC-V soft-core CPUs are available. A major benefit is that these can be more experimental, and cutting edge, than physical CPUs and can also provide aspects such as bespoke accelerators. My interest here is in the integration of these into CPU designs for HPC and the software support.

RISC-V is an extremely exciting technology which will likely revolutionise computing. In addition to my research activities I am chair of the RISC-V International HPC SIG and lead organiser for the RISC-V HPC workshop series at ISC and SC, as well as being involved in the same workshop at HPC Asia 24 and numerous RISC-V BoFs.

FPGAs and CGRAs

More capable hardware makes FPGAs a serious proposition for HPC like never before. However, in order to gain good (or even acceptable) performance we need to rethink our algorithms and move from an imperative, Von Neumann, style to a dataflow design. It is this area, the design and development of new dataflow techniques, that I find most interesting within the context of FPGAs, and I have led the porting of numerous HPC codes onto FPGAs across several domains (e.g. finance, CFD, atmospheric modelling). I was a Co-I on the EPSRC-funded FPGA testbed, which aimed to make FPGAs more accessible for HPC developers.
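
The difference between the two styles can be illustrated with a minimal sketch. This is not taken from any of the ported codes, and all function names are hypothetical. Python generators are used here only to model the streaming structure: on an FPGA the stages would run concurrently as separate hardware regions connected by FIFOs, whereas generators simply interleave on one thread.

```python
from typing import Iterable, Iterator, List


def imperative_pipeline(values: List[float]) -> float:
    """Von Neumann style: every stage finishes and stores a full
    intermediate array in memory before the next stage starts."""
    scaled = [2.0 * v for v in values]
    shifted = [s + 1.0 for s in scaled]
    return sum(shifted)


def read_stage(values: Iterable[float]) -> Iterator[float]:
    """Streams input elements one at a time (models reading from memory)."""
    for v in values:
        yield v


def scale_stage(stream: Iterator[float], factor: float) -> Iterator[float]:
    """Consumes one element, emits one element, holds no array."""
    for v in stream:
        yield factor * v


def shift_stage(stream: Iterator[float], offset: float) -> Iterator[float]:
    """Second streaming stage, fed directly by the previous one."""
    for v in stream:
        yield v + offset


def dataflow_pipeline(values: Iterable[float]) -> float:
    """Dataflow style: stages are chained so each element flows through
    the whole pipeline without intermediate arrays being materialised."""
    stream = shift_stage(scale_stage(read_stage(values), 2.0), 1.0)
    return sum(stream)


if __name__ == "__main__":
    data = [1.0, 2.0, 3.0]
    print(imperative_pipeline(data), dataflow_pipeline(data))
```

Both functions compute the same result. The point of the restructuring is that the dataflow version exposes independent stages that hardware can keep busy at the same time.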

Coarse-Grained Reconfigurable Architectures (CGRAs) provide coarse-grained reconfigurability, typically in the form of processing cores within a flexible interconnect, and are becoming more popular. I am PI of an EPSRC-funded CGRA project, which has explored accelerating HPC codes on the Cerebras CS-2 and AMD Xilinx AI engines. As part of this research I undertook the first study in leveraging AI engines for HPC workloads.

I have been awarded a personal research fellowship by the Royal Society of Edinburgh to explore greener weather forecasting on supercomputers. Focussing on Met Office workloads and running in 2024, this has two major themes. The first is to leverage FPGAs in the network for undertaking in-situ data post-processing and reduction, a task that is currently done by CPUs. The second is to explore the role of AMD Xilinx AI engines in accelerating the CASIM microphysics model. It is my hope that the specialist properties of this hardware will provide an increase in performance at a significantly reduced energy draw.

HPC compilers and programming models

A major motivation of my research is the grand challenge of how we can enable scientists and engineers to most effectively program current and future generation HPC machines. Given the large degree of parallelism, and heterogeneous nature of our supercomputers, this currently requires deep expertise. Put simply, scientists want to worry about their problem rather than the tricky, low level details of parallelism, and indeed if we do not solve this problem then the benefit we gain from exascale supercomputers will likely be limited.

xDSL: The cross domain DSL ecosystem

I am a Co-I on, and knowledge exchange coordinator of, the EPSRC-funded xDSL project. The fundamental issue we look to address is that Domain Specific Languages (DSLs) are one way in which we can solve the HPC programming challenge; however, the underlying DSL compiler ecosystems tend to be heavily siloed, which heightens user risk and results in maintenance burdens. To this end we are developing a common ecosystem for DSLs based around MLIR/LLVM, enabling DSLs to become a thin abstraction layer atop this existing, well supported technology.

Using two DSLs to drive our experiments, we are developing not only a Python-based MLIR compiler framework but also numerous HPC-focussed dialects. Furthermore, we have found that by leveraging MLIR it is often possible to consolidate many of these domain specific compilers with general purpose ones. For instance, we have conducted several experiments improving the performance of Flang by leveraging domain specific optimisations during compilation.

As part of this I am also exploring some of the grand challenges around programming FPGAs and CGRAs. FPGA programmers must currently restructure their algorithm into a dataflow style by hand. Our research enables far more automation, allowing the compiler to take codes designed for CPU execution and restructure these for dataflow architectures, delivering an order of magnitude better performance than existing approaches. Within this work one of my PhD students brought Flang to FPGAs, enabling Fortran High Level Synthesis programming of FPGAs (which significantly enhances productivity for HPC developers as one need not first port their codes to C++).

Productive performance programming on low SWaP CPUs

I am interested in how to most effectively program low Size Weight and Power (SWaP) architectures and developed ePython, the world’s smallest Python implementation. This was initially targeted at the Epiphany many-core CPU but has since been extended to support the MicroBlaze and PicoRV RISC-V CPUs. Working with one of my PhD students, we extended this into the Vipera framework for dynamic languages on low SWaP architectures, and using a novel compilation approach we are able to provide performance near, and in some cases exceeding, that of native C code. Furthermore, our approach enables execution of unlimited code sizes with unlimited data sizes, requiring only around 4KB of memory on the device.

Urgent supercomputing

I led the interactive supercomputing work package on the H2020 FETHPC VESTEC project and was responsible for ten deliverables. This project explored the fusion of real-time data with HPC for running urgent real-time workloads to better inform disaster response. Our case studies included forest fires and mosquito-borne diseases, and my work package, which comprised around 100 months of effort over 7 partners, developed a technology providing federation of these interactive workloads across a range of supercomputers.

As part of this project I began the UrgentHPC workshop series that ran at SC19, SC20, SC21, and SC22. At the end of the project this initiative merged with the interactive HPC workshop series, and since then I have been involved in organising further workshops at ISC22 and ISC23, as well as a BoF at SC23.

HPC code acceleration

I have worked with numerous organisations, developing and optimising their HPC codes, with a particular interest in developing new techniques for improving performance. For instance, I was the main developer of the Met Office NERC Cloud model (MONC) between 2014 and 2015. This is the Met Office’s high resolution atmospheric model, which they use to explore weather phenomena at small scales and to develop new parameterisations for their main weather forecasting model.

The previous model was capable of modelling only around 10 million grid points, whereas this work increased capability to support simulation of tens of billions of grid points over many thousands of CPU cores. In addition to the computation, a major challenge was in the refinement of raw data to generate the higher level information which the scientists are interested in. I developed a novel in-situ approach, where CPU cores are shared between computation and data analysis, enabling an order of magnitude increase in the data processing that was possible. Since the initial development I have been a Co-I on several projects that have further enhanced the model.
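
The general idea behind in-situ analysis, refining raw data while it is still in memory rather than writing it out and post-processing it later, can be shown with a toy sketch. This is an illustration under simplifying assumptions and not the MONC implementation: threads and a queue stand in for dedicated analysis cores and inter-core communication, the "field" is a four-element list, and all names are hypothetical.

```python
import queue
import threading
from typing import Dict, List, Optional, Tuple

# A message is either (timestep, raw field) or None to signal completion.
Message = Optional[Tuple[int, List[float]]]


def compute_core(step_count: int, outbox: "queue.Queue[Message]") -> None:
    """Stands in for the simulation: produces a raw field each timestep
    and hands it straight to the analysis core instead of writing to disk."""
    for step in range(step_count):
        field = [float(step + i) for i in range(4)]  # toy raw data
        outbox.put((step, field))
    outbox.put(None)  # tell the analysis core there is no more data


def analysis_core(inbox: "queue.Queue[Message]", results: Dict[int, float]) -> None:
    """Stands in for a core dedicated to data analysis: reduces each raw
    field to a higher level quantity (here, its mean) as it arrives."""
    while True:
        message = inbox.get()
        if message is None:
            break
        step, field = message
        results[step] = sum(field) / len(field)


def run_in_situ(step_count: int) -> Dict[int, float]:
    """Runs computation and analysis side by side and returns the
    reduced values keyed by timestep."""
    channel: "queue.Queue[Message]" = queue.Queue()
    results: Dict[int, float] = {}
    analyst = threading.Thread(target=analysis_core, args=(channel, results))
    analyst.start()
    compute_core(step_count, channel)
    analyst.join()
    return results


if __name__ == "__main__":
    print(run_in_situ(3))
```

Only the reduced values leave the analysis side, which is why this style of processing can cut the volume of data that has to be stored.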

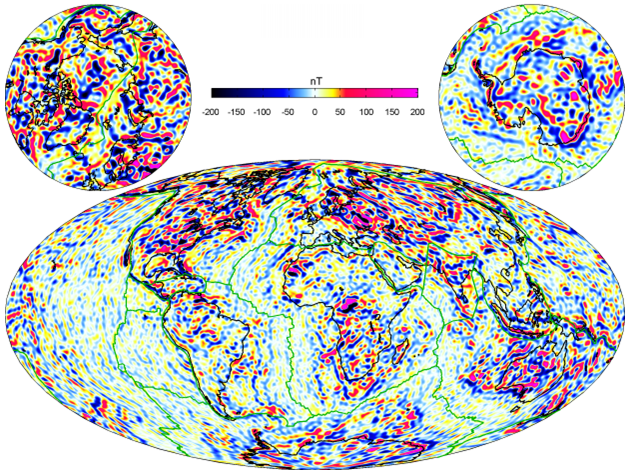

I have been a Co-I on a couple of projects with the British Geological Survey, optimising their geomagnetic models for modern supercomputers. For example, their Model of the Earth’s Magnetic Environment (MEME) code predicts the changing geomagnetic environment, but the challenge was that it was only capable of handling a tiny fraction of the raw data available from the Swarm satellites and ground stations. In order to improve the accuracy of the model, and enable exploration of the more challenging polar latitudes, it was desirable to increase the size of the input data set and the resolution. At the core of this optimisation work was the development of a novel technique for parallel assembly of the matrix of equations, which had been extremely costly in the existing code base. Ultimately this work resulted in around a ten times increase in the size of data that could be handled by the code, while reducing the runtime by over 100 times.

Machine learning

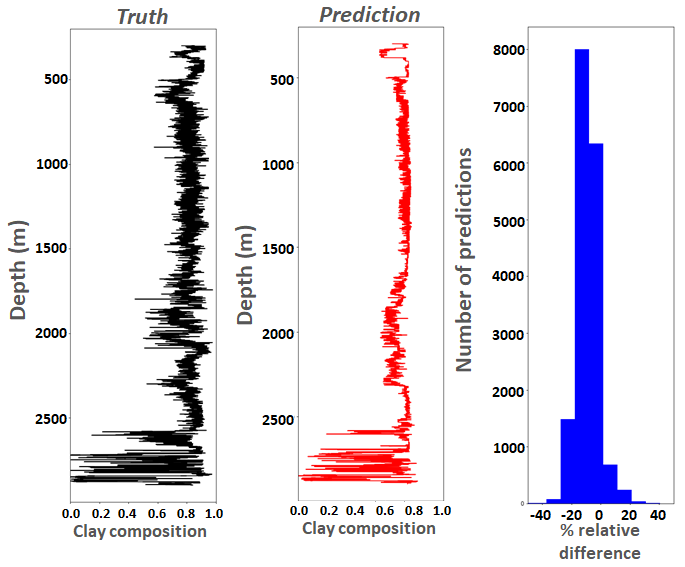

Whilst most of my interest around machine learning is in how best we can leverage novel architectures at the edge for ML workloads, I was PI of a project which explored the use of ML for optimising petrophysical workflows of well log data. The objective was to make better use of the petrophysicist’s time, because manual interpretation of each well took around 7 days and there were thousands of wells that required processing. However, the petrophysicist was using their knowledge and expertise to identify and extract specific patterns in the raw data, and the hypothesis was that, based upon a large enough training set, it would be possible to train an ML model to identify these patterns. We leveraged boosted trees and deep neural networks, ultimately reducing the overall well processing time down to around two days. After the project concluded the IP was sold to PGS, which resulted in a follow-on project where we explored tuning the ML algorithms for their workloads.
