When we developed the in-situ data analytics of MONC, we adopted an asynchronous approach to communication. Communication calls were given a Fortran subroutine and a unique identifier as arguments and, once the communication completed, the corresponding subroutine was executed in a thread with the resulting data. This worked well, but it was a source of considerable complexity in the code itself, and it felt like something that an underlying communications library should support. We believed that the idea could apply more generally and work well as an approach to writing highly scalable, asynchronous codes.

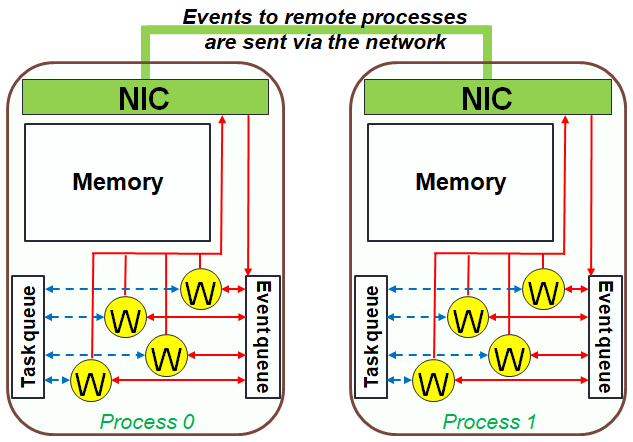

We therefore developed a library called Event Driven Asynchronous Tasks (EDAT), which aims to give programmers a realistic distributed memory view of their machine while still allowing them to write task-based codes. It is BSD licensed and available on GitHub (here), and the programmer's architectural view is illustrated in the accompanying diagram. Programmers understand that memory spaces are distributed and connected by a network, with each process containing a number of workers (typically one per core) which physically execute tasks. Tasks are submitted by the programmer, and each comprises the function to run and a list of dependencies that must be met before a worker can execute it. Dependencies are events, which originate from some process and are labelled with an Event IDentifier (EID). Events are sent from one process to another (the target can be the sending process itself), and this is the main way in which tasks and processes interact. In addition to the EID, an event may carry optional payload data for the tasks to process. Whilst an event might look a bit like a message, such as in MPI, the way in which events are sent and delivered to tasks is entirely abstracted from the programmer, and a task is activated once all of its events have arrived.

It was our hypothesis that splitting parallel codes into independent tasks that interact only via their dependencies (events) would result in a far more loosely coupled and asynchronous application, which could improve performance and scalability. Furthermore, with this abstract model the programmer can focus on what interacts with what, rather than on how the interaction happens.

A simple example

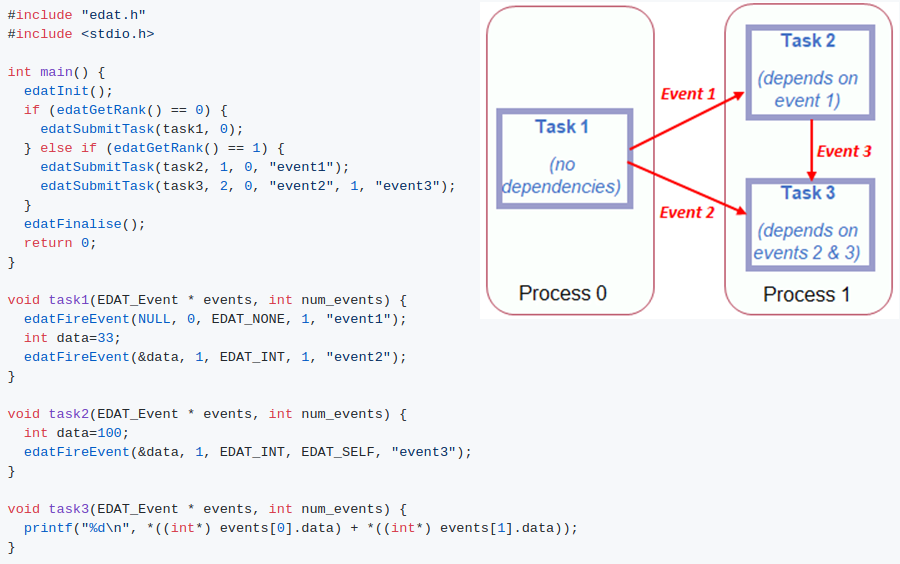

The code shown here illustrates a simple example in which three tasks are submitted. Task 1 has no dependencies and so is able to run immediately (on process 0), whereas tasks 2 and 3 are submitted on process 1 but are unable to run until their dependencies are met. Task 1 fires two events to process 1, event1 and event2, the latter containing some payload data. When event1 arrives at process 1, the second task becomes eligible for execution by a worker; it fires an event to its own process which, combined with event2, makes the third task eligible for execution. The idea is that the programmer is aware of the distributed nature of the target machine and organises their parallel application as a set of loosely coupled tasks which interact via explicit events. The programmer can control which tasks are scheduled where, with the understanding that sending events over the network incurs greater overhead than sending them locally.
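
To make the flow of this example concrete, the following is a minimal single-threaded Python sketch of the same three tasks. It is a toy model only: `ToyRuntime`, `submit_task`, `fire_event`, and the event name `event3` are hypothetical names invented for this illustration and are not the EDAT API, and the "processes" are simply indices into lists rather than separate memory spaces. It also assumes that an event arriving before any matching task has been submitted is stored until one is.

```python
from collections import deque


class ToyRuntime:
    """Toy stand-in for a set of processes exchanging events (not the EDAT API)."""

    def __init__(self, num_processes):
        # Per-process tasks that are still waiting on at least one event.
        self.waiting = [[] for _ in range(num_processes)]
        # Per-process events that arrived before any task wanted them.
        self.stored = [[] for _ in range(num_processes)]
        # Tasks whose dependencies have all been met.
        self.ready = deque()

    def submit_task(self, process, fn, deps=()):
        task = {"process": process, "fn": fn, "needs": set(deps), "events": {}}
        # Consume matching events that arrived before this task was submitted.
        for event in list(self.stored[process]):
            eid, payload = event
            if eid in task["needs"]:
                self.stored[process].remove(event)
                self._deliver(task, eid, payload)
        if task["needs"]:
            self.waiting[process].append(task)
        else:
            self.ready.append(task)

    def fire_event(self, target, eid, payload=None):
        # Hand the event to the first waiting task on the target that needs it.
        for index, task in enumerate(self.waiting[target]):
            if eid in task["needs"]:
                self._deliver(task, eid, payload)
                if not task["needs"]:
                    del self.waiting[target][index]
                    self.ready.append(task)
                return
        # No task wants it yet, so keep it until one is submitted.
        self.stored[target].append((eid, payload))

    @staticmethod
    def _deliver(task, eid, payload):
        task["needs"].discard(eid)
        task["events"][eid] = payload

    def run(self):
        # A single loop plays the role of the workers.
        while self.ready:
            task = self.ready.popleft()
            task["fn"](self, task["events"])


log = []


def task1(rt, events):
    log.append("task1 on process 0")
    rt.fire_event(1, "event1")
    rt.fire_event(1, "event2", payload=42)  # event2 carries payload data


def task2(rt, events):
    log.append("task2 on process 1")
    rt.fire_event(1, "event3")  # event fired to its own process


def task3(rt, events):
    log.append(f"task3 on process 1, payload={events['event2']}")


rt = ToyRuntime(2)
rt.submit_task(0, task1)                              # no dependencies
rt.submit_task(1, task2, deps=["event1"])
rt.submit_task(1, task3, deps=["event2", "event3"])
rt.run()
print(log)
```

Note that the tasks never name each other; the only coupling between them is the event identifiers, which is the property the programming model is built around.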

In many task-based frameworks, such as OpenMP 4.0, OmpSs, StarPU, and HPX, interoperability between shared memory and distributed memory technologies is a challenge when one wishes to scale task-based codes. Complexity increases significantly when one addresses distributed memory architectures, with challenges including optimal data placement, efficient communication, and task locality. Broadly, there are two common approaches: either place the burden of these challenges on the runtime, which keeps things simple for programmers but leaves them reliant on the runtime making good decisions (for instance, OmpSs has adopted a directory/cache), or place the burden on programmers and require them to explicitly mix the task-based and distributed memory (such as MPI) technologies. Arguably the task-based technology that has best navigated this challenge is Charm++, where conceptually objects contain a number of methods that can be invoked remotely. However, Charm++ combines the task-based model with the Object Oriented (OO) programming paradigm and, whilst this has some benefits, crucially the programmer must write their code in an OO style in C++. This often requires completely rethinking and redesigning an application in OO C++ and porting it over in a big-bang approach, which is not the case for our approach. EDAT is language agnostic (in addition to C and C++ we have bindings for Fortran and Python) and can be applied incrementally to existing code bases, which we think is of significant benefit.

The proof of the pudding is in the eating

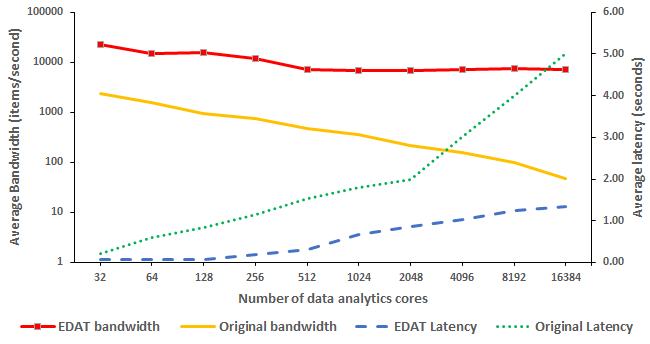

After developing EDAT we went back to the in-situ data analytics of MONC and replaced the bespoke active messaging style communications in the model with this task-based library. The code size was reduced by around 10%, with approximately a further 2% of the code requiring modification to interact with EDAT. We then undertook performance tests on a Cray XC30 with a standard stratus cloud test case and a 1 to 1 ratio between computational and data analytics cores. The accompanying graph illustrates the results of these tests, plotting both the average bandwidth (the number of items per second that can be processed, ignoring the file write time) and the latency (the average time it takes for each raw data item to be processed and produce a resulting value, again ignoring the time spent writing to disk) against the number of data analytics cores. In this experiment we are weak scaling, due to the 1:1 mapping of analytics and compute cores, up to 32768 cores in total, with half performing data analytics and half performing computation. As we increase the number of data analytics cores, the overall work and communication increases because more values need to be processed globally for each final result. It can be seen that the bandwidth afforded by the EDAT approach is an order of magnitude greater than that of the original, bespoke MONC code. The average latency is also significantly smaller for the EDAT implementation than for the original code.
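
As a small illustration of how the two metrics defined above relate to each other, the sketch below computes them from per-item timestamps. This is one possible reading of the definitions rather than the measurement code used in the experiments: the function name `bandwidth_and_latency` is hypothetical, bandwidth is assumed to be the item count divided by the time between the first arrival and the last completion, and the timestamps are made-up values chosen for easy arithmetic, not measurements.

```python
def bandwidth_and_latency(samples):
    """Compute (items per second, mean seconds per item).

    samples holds one (arrival_time, completion_time) pair per raw data item,
    in seconds, with any file write time already excluded.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    first_arrival = min(arrival for arrival, _ in samples)
    last_completion = max(completion for _, completion in samples)
    # Assumed definition: items processed over the whole observation window.
    bandwidth = len(samples) / (last_completion - first_arrival)
    # Mean time from an item arriving to its resulting value being produced.
    latency = sum(done - arrival for arrival, done in samples) / len(samples)
    return bandwidth, latency


# Illustrative timestamps only (not measured data).
samples = [(0.0, 0.5), (0.25, 0.75), (0.5, 1.5), (1.0, 2.0)]
bandwidth, latency = bandwidth_and_latency(samples)
print(f"bandwidth = {bandwidth} items/s, latency = {latency} s")
```

The two numbers are related but distinct: overlapping the processing of many items raises bandwidth without necessarily reducing the latency of any single item.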

Resilience via ACID

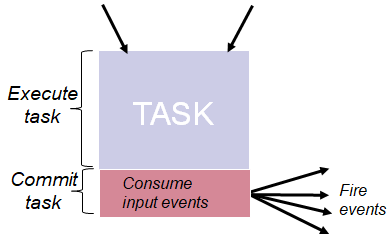

Resilience of HPC codes is a topic which many believe will become more important as HPC machines grow significantly in size and the mean time between failures falls. In the database world, transactions are ACID compliant, meaning that they are Atomic, Consistent, Isolated, and Durable, so that if a system failure occurs mid-transaction the system is still left in a meaningful state. We realised that the same ideas could be applied to EDAT tasks if they are pure and only change the global state by firing events. The EDAT library was modified such that the running of a task comprises two stages, execution and commit, where any events fired during execution are stored and only physically sent during the commit phase. This means that if hardware were to fail mid-task, the task can simply be re-run without any adverse impact on the overall state of the program. Furthermore, because the task is pure, the fired events are its only output and are effectively all that matters for correctness. Hence a task can be executed a number of times on different hardware and the resulting events compared bit for bit; if they do not match, the runtime knows that at least one of the executions was erroneous. In this resilience mode the runtime state (all events and submitted tasks) must be stored, and we provide two implementations of this: an in-memory ledger and a file-based ledger. Both involve some overhead, which is much lower for the in-memory ledger than for the file-based ledger, although the in-memory approach offers less overall protection.
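
The execute and commit stages, and the comparison of replicated executions, can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not how the EDAT runtime implements them: `execute`, `commit`, `run_with_retry`, and `replicas_agree` are hypothetical names, a hardware failure is simulated by raising `RuntimeError`, the network is a plain list, and the bitwise comparison is approximated by comparing the JSON-serialised bytes of the buffered events. The ledger is not modelled.

```python
import json


def execute(task, inputs):
    """Execution stage: run the task, buffering fired events instead of sending them."""
    buffered = []

    def fire(target, eid, payload=None):
        buffered.append([target, eid, payload])

    task(inputs, fire)
    return buffered


def commit(buffered, network):
    """Commit stage: only now do the buffered events become visible to others."""
    network.extend(buffered)


def run_with_retry(task, inputs, network, attempts=3):
    for _ in range(attempts):
        try:
            buffered = execute(task, inputs)
        except RuntimeError:
            # Failure mid-task: nothing was sent, so the task can simply be re-run.
            continue
        commit(buffered, network)
        return True
    return False


def replicas_agree(replica_tasks, inputs):
    """Run each replica and compare the serialised events byte for byte."""
    outputs = [json.dumps(execute(t, inputs)).encode() for t in replica_tasks]
    return all(output == outputs[0] for output in outputs)


failures_left = [1]  # simulates one hardware failure; not part of the task's logic


def total_task(inputs, fire):
    fire(1, "started")
    if failures_left[0] > 0:
        failures_left[0] -= 1
        raise RuntimeError("simulated hardware failure mid-task")
    fire(1, "total", sum(inputs))


def corrupted_total_task(inputs, fire):
    fire(1, "started")
    fire(1, "total", sum(inputs) + 1)  # stands in for a silent hardware error


network = []
committed = run_with_retry(total_task, [1, 2, 3], network)
agree = replicas_agree([total_task, total_task], [1, 2, 3])
mismatch = not replicas_agree([total_task, corrupted_total_task], [1, 2, 3])
print(network, agree, mismatch)
```

The first attempt fails after firing `started`, but because that event was only buffered, the network sees exactly one `started` and one `total` event from the successful re-run.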
